Interactive Video Object Segmentation?

The semi-supervised case assumes the user inputs a full mask of the object of interest. We believe that it makes sense to explore the interactive scenario, where the user gives iterative refinement inputs to the algorithm.How are you going to evaluate that?

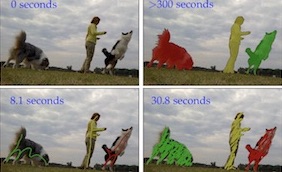

The main idea is that our servers will simulate human interaction in the form of scribbles. A participant will connect to our servers and receive a set of scribbles, to which they should reply with a video segmentation. The servers will register the time spent and the quality of the results, and then reply with a refinement scribble. We consider two metrics for the challenge:- We compute the Area Under the Curve (AUC) of the plot Time vs Jaccard. Each sample in the plot is computed considering the average time and the average Jaccard obtained for the whole test-dev for a certain interaction. If the user is not able to compute all the interactions, the values from the last valid interactions will be used for the following ones.

- We interpolate the previous Time vs Jaccard plot at 60 seconds to obtain a Jaccard value. This evaluates which quality a method can obtain in 60 seconds for a sequence containing the average number of objects in test-dev of DAVIS 2017 (~3 objects).

You can find more information here and in the the paper below.

How do I participate?

We have released a Python package to simulate the human interaction, you can find more information here.How do I submit?

You don't have to submit the results anywhere, the result of the interactions are logged in the server by just using the Python package that we provide. Once all the interactions have been done, the server will check which is your best submission and update the leaderboard accordingly.When?

Local evaluation of the results is now available (train and val subsets). The evaluation using test-dev against the server is available from 13th May 2018 23:59 UTC to 1st June 2018 23:59 UTCWhy teaser?

The technical challenges behind this track make us be cautious about this first edition, so we launch in beta mode.So why participate?

Despite not being a full-fledged competition, if results are interesting, we will treat them with the same honors as those in the main challenge (poster and associated paper) and you get to be the among the first participants in the track.Prizes

The top submission will receive a subscription to Adobe CC for 1 year.Leaderboard By AUC

| Position | Participant | Session ID | AUC | J@60s |

| 1 | Seoung Wug Oh (Yonsei University) | e61a0983 | 0.641 | 0.647 |

| 2 | Mohammad Najafi (University of Oxford) | 7fad0704 | 0.549 | 0.379 |

| 3 | Alex Lin (BYU) | eff8bf8e | 0.450 | 0.230 |

| 4 | Zilong Huang (HUST) | 3d260255 | 0.328 | 0.335 |

| 5 | Scribble-OSVOS (Baseline) | ad8f10c6 | 0.299 | 0.141 |

| 6 | Kate Rakelly (UC Berkeley) | de1bcfd3 | 0.269 | 0.273 |

Leaderboard By Jaccard@60s

| Position | Participant | Session ID | AUC | J@60s |

| 1 | Seoung Wug Oh (Yonsei University) | c2f7410a | 0.641 | 0.647 |

| 2 | Mohammad Najafi (University of Oxford) | 88a2d08d | 0.527 | 0.395 |

| 3 | Zilong Huang (HUST) | 3d260255 | 0.328 | 0.335 |

| 4 | Kate Rakelly (UC Berkeley) | de1bcfd3 | 0.269 | 0.273 |

| 5 | Alex Lin (BYU) | 95d8f1f7 | 0.417 | 0.240 |

| 6 | Scribble-OSVOS (Baseline) | 6ab8d261 | 0.196 | 0.153 |

Citation

The 2018 DAVIS Challenge on Video Object Segmentation

S. Caelles,

A. Montes,

K.-K. Maninis,

Y. Chen,

L. Van Gool,

F. Perazzi, and

J. Pont-Tuset

arXiv:1803.00557, 2018

[PDF] [BibTex]

@article{Caelles_arXiv_2018,

author = {Sergi Caelles and Alberto Montes and Kevis-Kokitsi Maninis and Yuhua Chen and Luc {Van Gool} and Federico Perazzi and Jordi Pont-Tuset},

title = {The 2018 DAVIS Challenge on Video Object Segmentation},

journal = {arXiv:1803.00557},

year = {2018}

}

The 2017 DAVIS Challenge on Video Object Segmentation

J. Pont-Tuset,

F. Perazzi,

S. Caelles,

P. Arbeláez,

A. Sorkine-Hornung, and

L. Van Gool

arXiv:1704.00675, 2017

[PDF] [BibTex]

@article{Pont-Tuset_arXiv_2017,

author = {Jordi Pont-Tuset and Federico Perazzi and Sergi Caelles and Pablo Arbel\'aez and Alexander Sorkine-Hornung and Luc {Van Gool}},

title = {The 2017 DAVIS Challenge on Video Object Segmentation},

journal = {arXiv:1704.00675},

year = {2017}

}

A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation

F. Perazzi,

J. Pont-Tuset,

B. McWilliams,

L. Van Gool,

M. Gross, and

A. Sorkine-Hornung

Computer Vision and Pattern Recognition (CVPR) 2016

[PDF] [Supplemental] [BibTex]

@inproceedings{Perazzi2016,

author = {F. Perazzi and J. Pont-Tuset and B. McWilliams and L. {Van Gool} and M. Gross and A. Sorkine-Hornung},

title = {A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation},

booktitle = {Computer Vision and Pattern Recognition},

year = {2016}

}