Definition

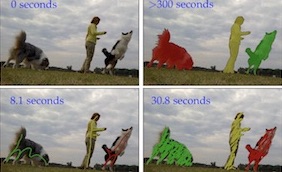

The interactive scenario assumes the user gives iterative refinement inputs to the algorithm, in our case in the form of a scribble, to segment the objects of interest. Methods have to produce a segmentation mask for that object in all the frames of a video sequence taking into account all the user interactions.More information in the DAVIS 2018 publication and the Interactive Python package documentation.

Dates and Phases

- Test: 27th April 2020 23:59 UTC - 15th May 2020 23:59 UTC.Prizes

All the participants invited to the workshop will get a subscription to Adobe CC for 1 year.Evaluation

In this edition, we use J&F instead of only Jaccard. Moreover, users can submit a list of frames where they would like to have the new interaction instead of considering all the frames in a video (behaviour by default), more information here.The main idea is that our servers simulate human interactions in the form of scribbles. A participant connects to our servers and receives a set of scribbles, to which they should reply with a video segmentation. The servers register the time spent and the quality of the results, and then reply with a set of refinement scribbles. We consider two metrics for the challenge:

- We compute the Area Under the Curve (AUC) of the plot Time vs J&F. Each sample in the plot is computed considering the average time and the average J&F obtained for the whole test-dev for a certain interaction. If the user is not able to compute all the interactions, the values from the last valid interactions will be used for the following ones.

- We interpolate the previous Time vs J&F plot at 60 seconds to obtain a J&F value. This evaluates which quality a method can obtain in 60 seconds for a sequence containing the average number of objects in test-dev of DAVIS 2017 (~3 objects).

Local evaluation of the results is available for train and val sets. The evaluation using test-dev against the server is available during the Test 2019 period. In both cases, please use the Interactive Python package.

Datasets (Download here, 480p resolution)

- Train + Val: 90 sequences from DAVIS 2017 Semi-supervised. The scribbles for these sets can be obtained here.- Test-Dev 2017: 30 sequences from DAVIS 2017 Semi-supervised. Ground truth not publicly available, unlimited number of submissions. The scribbles are obtained interacting with the server.

Feel free to train or pre-train your algorithms on any other dataset apart from DAVIS (Youtube-VOS, MS COCO, Pascal, etc.) or use the full resolution DAVIS annotations and images.

Submission

We have released a Python package to simulate the human interaction, you can find more information here. You don't have to submit the results anywhere, the result of the interactions are logged in the server by just using the Python package that we provide. Once all the interactions have been done, the server will check which is your best submission and update the leaderboard accordingly.More information on how to install the Python package can be found here.

Papers

- Right after the challenge closes (15th May) we will invite all participants to submit a short abstract (400 words maximum) of their method (Deadline 19th May 23:59 UTC).- Together with the results obtained, we will decide which teams are accepted at the workshop. Date of notification 20th May.

- Accepted teams will be able to submit a paper describing their approach (Deadline 4th June 23:59 UTC). The template of the paper is the same as CVPR, but length will be limited to 4 pages including references.

- Papers will also be invited to the workshop in form of oral presentation or poster.

- Accepted papers will be self-published in the web of the challenge (not in the official proceedings, although they have the same value).

Other considerations

- Each entry must be associated to a team and provide its affiliation.- The best entry of each team will be public in the leaderboard at all times.

- We will only consider the "interactive" scenario: only supervision provided by the scribbles that the server sends is allowed. Although we have no way to check the latter at the challenge stage, we will make our best to detect it a posteriori before the workshop.

- We reserve the right to remove one of the entry methods to the competition when there is a high technical similarity to methods published in previous conferences or workshops. We do so in order to keep the workshop interesting and to push state of the art to move forward.

- The new annotations in this dataset belong to the organizers of the challenge and are licensed under a Creative Commons Attribution 4.0 License.

Citation

The 2018 DAVIS Challenge on Video Object Segmentation

S. Caelles,

A. Montes,

K.-K. Maninis,

Y. Chen,

L. Van Gool,

F. Perazzi, and

J. Pont-Tuset

arXiv:1803.00557, 2018

[PDF] [BibTex]

@article{Caelles_arXiv_2018,

author = {Sergi Caelles and Alberto Montes and Kevis-Kokitsi Maninis and Yuhua Chen and Luc {Van Gool} and Federico Perazzi and Jordi Pont-Tuset},

title = {The 2018 DAVIS Challenge on Video Object Segmentation},

journal = {arXiv:1803.00557},

year = {2018}

}

The 2017 DAVIS Challenge on Video Object Segmentation

J. Pont-Tuset,

F. Perazzi,

S. Caelles,

P. Arbeláez,

A. Sorkine-Hornung, and

L. Van Gool

arXiv:1704.00675, 2017

[PDF] [BibTex]

@article{Pont-Tuset_arXiv_2017,

author = {Jordi Pont-Tuset and Federico Perazzi and Sergi Caelles and Pablo Arbel\'aez and Alexander Sorkine-Hornung and Luc {Van Gool}},

title = {The 2017 DAVIS Challenge on Video Object Segmentation},

journal = {arXiv:1704.00675},

year = {2017}

}

A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation

F. Perazzi,

J. Pont-Tuset,

B. McWilliams,

L. Van Gool,

M. Gross, and

A. Sorkine-Hornung

Computer Vision and Pattern Recognition (CVPR) 2016

[PDF] [Supplemental] [BibTex]

@inproceedings{Perazzi2016,

author = {F. Perazzi and J. Pont-Tuset and B. McWilliams and L. {Van Gool} and M. Gross and A. Sorkine-Hornung},

title = {A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation},

booktitle = {Computer Vision and Pattern Recognition},

year = {2016}

}